Optimizing Cache Utilization: Leveraging Most Recently Used (MRU) Principles

Most recently used (MRU) caching is a memory optimization strategy that improves the efficiency and performance of cache memory systems by prioritizing recently accessed data for retention. It rests on the principle of temporal locality: items accessed most recently are likely to be accessed again in the near future, which makes them valuable candidates to keep in the cache.

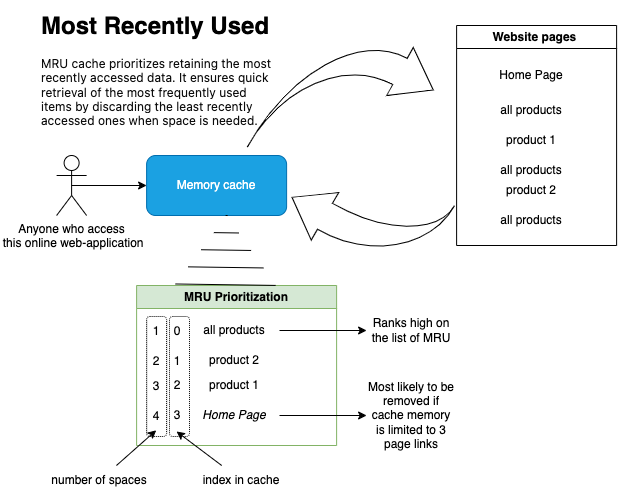

Scenario: Most Recently Used Use Case

Consider a web browser that caches recently visited web pages to speed up browsing. With the recency-based strategy described in this article, each time the user visits a page, it is added to the cache as the most recently used page. If the cache is at maximum capacity when a new page needs to be added, the least recently used page is evicted to make room. The most recently accessed pages therefore stay in the cache and load faster on subsequent visits, which improves the browsing experience.
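The browser scenario can be sketched in a few lines of Python. This is a minimal illustration, not how any real browser works: the `BrowserPageCache` class, its `visit` method, and the placeholder page content are all hypothetical. It relies on `collections.OrderedDict`, which remembers insertion order and can move a key to the end (most recent) or pop from the front (least recent).

```python
from collections import OrderedDict


class BrowserPageCache:
    """Hypothetical page cache for the browser scenario.

    Keeps the most recently visited pages and, when full, evicts the
    least recently visited one.
    """

    def __init__(self, capacity):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self.pages = OrderedDict()  # url -> content, least recent first

    def visit(self, url, content=""):
        """Record a visit to `url`; return the evicted URL, or None."""
        if url in self.pages:
            self.pages.move_to_end(url)  # revisit: now the most recent page
            return None
        evicted = None
        if len(self.pages) >= self.capacity:
            # popitem(last=False) removes the least recently visited page
            evicted, _ = self.pages.popitem(last=False)
        self.pages[url] = content
        return evicted


cache = BrowserPageCache(capacity=2)
cache.visit("/home")
cache.visit("/news")
cache.visit("/home")  # revisit: /home becomes the most recent page
print(cache.visit("/docs"))  # /news
print(list(cache.pages))  # ['/home', '/docs']
```

Revisiting `/home` before loading `/docs` is what saves it: `/news` becomes the oldest entry and is the one evicted.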

Here is a step-by-step explanation of how MRU caching optimization works:

Cache Structure

Like other caching strategies, MRU caching maintains a cache alongside the main memory (e.g., RAM) or storage (e.g., disk). The cache is typically implemented by combining a hash map, which finds an item by its key in constant time, with a doubly linked list, which keeps the items ordered from most to least recently used. Each item is stored as a key-value pair, with a unique key identifying the data.
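A minimal sketch of that combined structure, assuming hypothetical `Node` and `RecencyStore` classes (neither comes from a library). The dictionary maps each key to its list node, and sentinel `head`/`tail` nodes bracket the list so insertions never need to special-case an empty cache.

```python
class Node:
    """One cache entry: a key-value pair plus links to its neighbours."""

    __slots__ = ("key", "value", "prev", "next")

    def __init__(self, key=None, value=None):
        self.key = key
        self.value = value
        self.prev = None
        self.next = None


class RecencyStore:
    """Hash map for key lookup + doubly linked list ordered by recency.

    The list runs head -> ... -> tail with the most recently used entry
    right after `head`. Sentinel head/tail nodes remove empty-list cases.
    """

    def __init__(self):
        self.index = {}  # key -> Node, for constant-time lookup
        self.head = Node()  # sentinel before the most recently used entry
        self.tail = Node()  # sentinel after the least recently used entry
        self.head.next = self.tail
        self.tail.prev = self.head

    def add_front(self, key, value):
        """Store a new key-value pair as the most recently used entry."""
        if key in self.index:
            raise KeyError(f"{key!r} is already stored")
        node = Node(key, value)
        node.prev = self.head
        node.next = self.head.next
        self.head.next.prev = node
        self.head.next = node
        self.index[key] = node

    def keys_by_recency(self):
        """Return keys from most to least recently used."""
        keys = []
        node = self.head.next
        while node is not self.tail:
            keys.append(node.key)
            node = node.next
        return keys


store = RecencyStore()
store.add_front("a", 1)
store.add_front("b", 2)
print(store.keys_by_recency())  # ['b', 'a']
print(store.index["a"].value)  # 1
```

The hash map answers "is this key cached?" without walking the list, while the list answers "which entry is oldest?" by looking at `tail.prev`.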

Accessing Items

When an item is requested, the cache marks it as the most recently used item. If the item is already present (a cache hit), it is moved to the front of the cache. If it is not present (a cache miss), it is fetched from the underlying data source and inserted at the front of the cache.
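The access path can be sketched as a `get` method. This assumes a hypothetical `RecencyCache` class and a caller-supplied `loader(key)` function standing in for the slower data source. It uses `collections.OrderedDict`, whose last position plays the role of the "front" described above.

```python
from collections import OrderedDict


class RecencyCache:
    """Minimal get-path sketch: hits are marked most recently used,
    misses are loaded from a slower source via `loader(key)`."""

    def __init__(self, capacity, loader):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self.loader = loader  # hypothetical callable that reads the backing store
        self.items = OrderedDict()  # least recently used first, most recent last

    def get(self, key):
        if key in self.items:
            self.items.move_to_end(key)  # hit: mark as most recently used
            return self.items[key]
        value = self.loader(key)  # miss: fetch from the underlying source
        if len(self.items) >= self.capacity:
            self.items.popitem(last=False)  # full: drop the least recently used
        self.items[key] = value  # new item enters as most recently used
        return value


backing_store = {"x": 1, "y": 2, "z": 3}  # stand-in for a slow data source
fetches = []


def load(key):
    fetches.append(key)  # record every trip to the slow source
    return backing_store[key]


cache = RecencyCache(capacity=2, loader=load)
cache.get("x")  # miss: loaded
cache.get("x")  # hit: served from the cache
cache.get("y")  # miss: loaded
print(fetches)  # ['x', 'y']
```

The second `get("x")` never reaches `load`, which is the whole point of the cache.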

Retaining Items

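When the cache reaches its maximum capacity and a new item needs to be added, the item at the back of the cache, which is the least recently used one, is evicted to make space. Because the most recently used items sit at the front, they are retained, on the assumption that they are the most likely to be accessed again soon. Note that in standard cache terminology this eviction rule is called least recently used (LRU) replacement; a policy named MRU replacement does the opposite and evicts the most recently used item, which pays off for access patterns such as repeated sequential scans over data larger than the cache.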
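To see why the eviction rule matters, the sketch below replays an access trace through a fixed-size cache and counts hits under two rules: evicting the least recently used key (the rule this section describes) and evicting the most recently used key (strict MRU replacement). The function name `count_hits` and both traces are hypothetical, chosen only to illustrate the contrast.

```python
from collections import OrderedDict


def count_hits(trace, capacity, policy):
    """Replay `trace` through a cache of `capacity` keys and count hits.

    policy="lru": evict the least recently used key when full.
    policy="mru": evict the most recently used key when full.
    """
    if policy not in ("lru", "mru"):
        raise ValueError("policy must be 'lru' or 'mru'")
    cache = OrderedDict()  # ordered from least to most recently used
    hits = 0
    for key in trace:
        if key in cache:
            hits += 1
            cache.move_to_end(key)  # mark as most recently used
            continue
        if len(cache) >= capacity:
            # last=False pops the oldest entry, last=True the newest
            cache.popitem(last=(policy == "mru"))
        cache[key] = True
    return hits


recent_reuse = ["a", "b", "b", "c", "b"]  # the latest item is reused soon
cyclic_scan = ["a", "b", "c"] * 3  # loop over more keys than fit
for name, trace in (("recent_reuse", recent_reuse), ("cyclic_scan", cyclic_scan)):
    print(name, count_hits(trace, 2, "lru"), count_hits(trace, 2, "mru"))
# recent_reuse 2 1
# cyclic_scan 0 3
```

When recent items are reused, evicting the oldest key wins; when a loop is just too large for the cache, evicting the oldest key misses on every access, and strict MRU replacement does better.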

Updating Item Usage

Each time an item is accessed, it is moved to the front of the cache. When the cache order is kept in a doubly linked list, this takes a constant number of pointer updates: the item is unlinked from its current position and relinked at the head, so the list always runs from most to least recently used and the accessed item moves as far from eviction as possible. It works much like the call register on a mobile phone, where the contact you called last appears at the top of the list.
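The call-register analogy maps directly onto the pointer updates. In this sketch, the hypothetical `CallRegister` class keeps contacts in a doubly linked list with sentinel nodes; `_unlink` bridges an entry's neighbours and `_link_front` splices it in after the head, each with a fixed number of assignments regardless of list length.

```python
class Entry:
    """A call-register entry with links to its neighbours."""

    __slots__ = ("contact", "prev", "next")

    def __init__(self, contact=None):
        self.contact = contact
        self.prev = None
        self.next = None


class CallRegister:
    """Hypothetical call log kept in most-recent-first order."""

    def __init__(self):
        self.entries = {}  # contact -> Entry
        self.head = Entry()  # sentinel before the most recent call
        self.tail = Entry()  # sentinel after the oldest call
        self.head.next = self.tail
        self.tail.prev = self.head

    def _unlink(self, entry):
        # Bridge the neighbours so the list skips this entry.
        entry.prev.next = entry.next
        entry.next.prev = entry.prev

    def _link_front(self, entry):
        # Splice the entry in directly after the head sentinel.
        entry.prev = self.head
        entry.next = self.head.next
        self.head.next.prev = entry
        self.head.next = entry

    def call(self, contact):
        """Record a call: new contacts are added, known ones move to the top."""
        entry = self.entries.get(contact)
        if entry is None:
            entry = Entry(contact)
            self.entries[contact] = entry
        else:
            self._unlink(entry)
        self._link_front(entry)

    def most_recent_first(self):
        contacts = []
        entry = self.head.next
        while entry is not self.tail:
            contacts.append(entry.contact)
            entry = entry.next
        return contacts


log = CallRegister()
for contact in ["Ann", "Bob", "Cy", "Ann"]:
    log.call(contact)
print(log.most_recent_first())  # ['Ann', 'Cy', 'Bob']
```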

Optimization Benefits

The MRU caching optimization strategy offers several benefits for cache performance and efficiency. Because it retains recently accessed items, it increases the likelihood of cache hits (finding a requested item in the cache) for workloads that tend to reuse recent data. This reduces the frequency of cache misses and the need to fetch data from slower storage devices or remote servers, which leads to:

- Faster access times

- Lower latency

- Better overall system performance
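One common way to quantify these benefits is the average access time relation, average time = hit time + miss rate × miss penalty. The sketch below applies it to hit and miss counts; the function name and the 1 ns / 100 ns timings are hypothetical values chosen for illustration, not measurements.

```python
def average_access_time(hits, misses, hit_time, miss_penalty):
    """Average access time = hit_time + miss_rate * miss_penalty.

    `hit_time` is paid on every access; `miss_penalty` is the extra cost of
    fetching from the slower source on a miss (same time unit for both).
    """
    accesses = hits + misses
    if accesses == 0:
        raise ValueError("need at least one access")
    miss_rate = misses / accesses
    return hit_time + miss_rate * miss_penalty


# Hypothetical timings: 1 ns per cache lookup, 100 ns extra per miss.
for hits, misses in ((80, 20), (95, 5)):
    t = average_access_time(hits, misses, hit_time=1.0, miss_penalty=100.0)
    print(f"miss rate {misses}/{hits + misses}: {t:.1f} ns")
# miss rate 20/100: 21.0 ns
# miss rate 5/100: 6.0 ns
```

Under these assumed timings, cutting the miss rate from 20% to 5% lowers the average access time from 21 ns to 6 ns, because the miss penalty dominates the total.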